How It Works#

dataPi is designed to simplify the process of creating and managing data pods for distributed datalakehouse systems. Here’s an overview of how dataPi works:

Core Concepts#

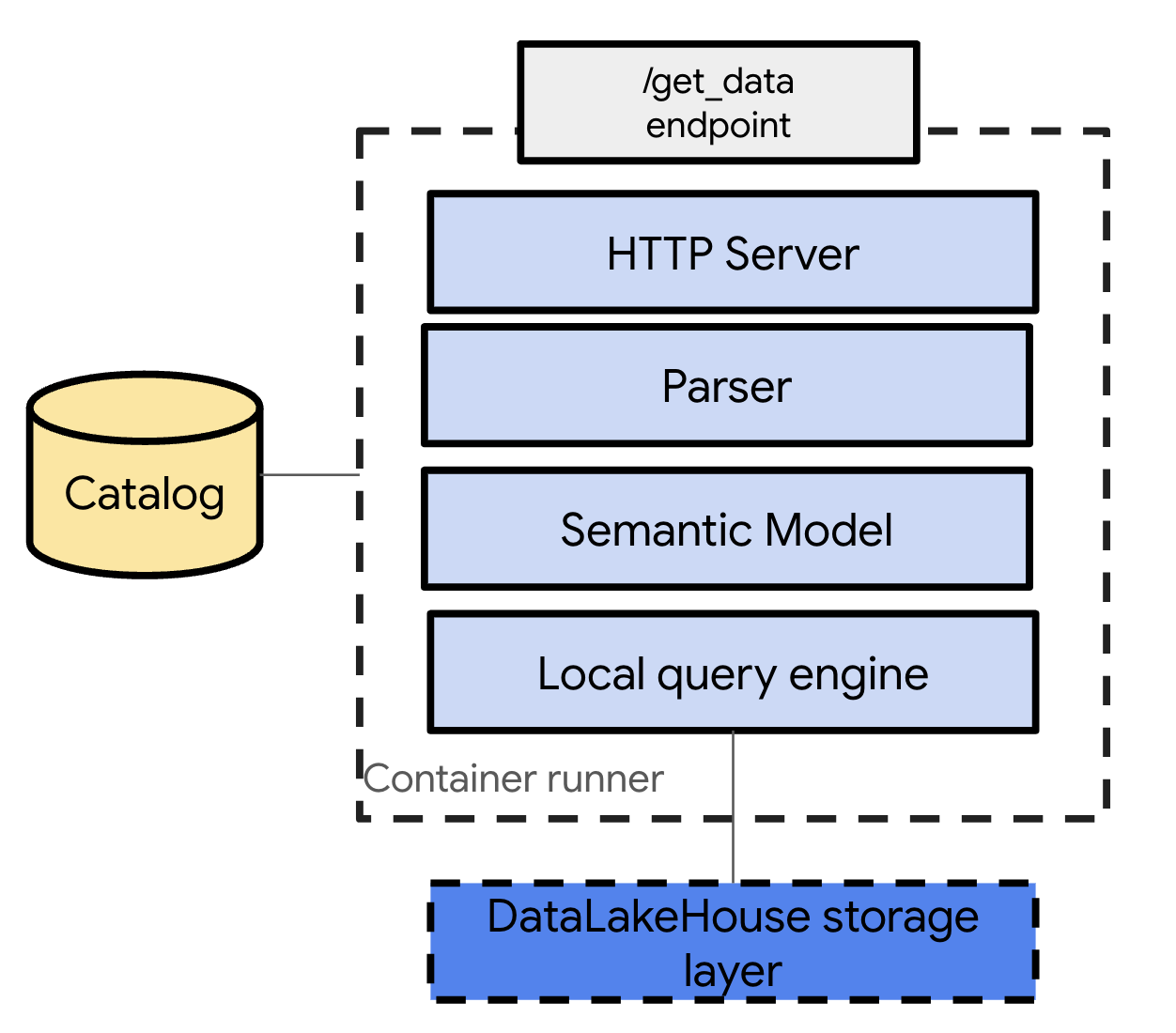

dataPods: Container-based deployable units that contain a local engine to resolve queries, specified as resources in a YAML file.

Iceberg DataLakehouse: Cloud storage based DataLake with Iceberg files.

Metastore Integration: dataPods interact with a metastore to locate and access data.

Workflow#

Query Specification: Developers define their query requirements (e.g., sales aggregated by quarter where region is EMEA) in a YAML file.

dataPod Creation: When

datapi runis executed, it creates a dataPod based on the YAML specification.API Exposure: Each dataPod exposes an API REST endpoint.

Query Execution: When the API is called, the dataPod:

Asks the metastore for the data location

Checks if permissions are in place

Retrieves the data

Executes the query locally within the container

Sends the data back to the application

This approach allows for efficient query resolution without calling the central DataPlatform engine.

Supported Technologies#

dataPi is built to work with various data platform technologies:

Lakehouse data format: Apache Iceberg

Cloud Storage: GCS, AWS S3, and Microsoft ADLS

Metastore: Apache Polaris

dataPod deployment target: Google Cloud Run

dataPod build service: Google Cloud Build

Query Sources#

Currently, dataPi supports Iceberg tables as query sources.

dataPod Types#

dataPi supports two types of dataPods:

Projection dataPods: - Support the

selectandfiltersquery operators.Reduction dataPods: - Support the

aggregate,group_by, andfiltersquery operators.

Next Steps#

To learn how to set up and use dataPi in your project, proceed to the Getting Started with dataPi guide.